If you are interested in data science, big data analytics, or Hadoop programming and want to learn more, this post is for you. Below is a list of the best online courses for Big Data Hadoop available today.

Best Online Course for Big Data Hadoop

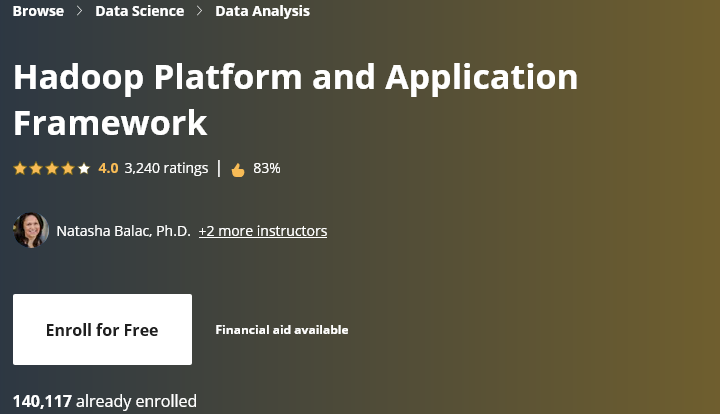

Hadoop Platform and Application Framework – UC San Diego

UC San Diego’s Introduction to the Hadoop Platform and Application Framework (View Course) is the best online course for Big Data Hadoop. This course takes you through hands-on examples with the Hadoop and Spark frameworks, two of the most common distributed data science platforms in the industry. You will learn about components such as HDFS, MapReduce, YARN, Hive/HiveQL, Pig/Pig Latin, and Spark (including Spark Core, Spark SQL, and MLlib). If you have ever wanted to know more about big data analytics, this course is the perfect place to start.

Big Data, Hadoop, and Spark Basics – IBM

IBM’s Big Data, Hadoop, and Spark Basics Course (View Course) provides a comprehensive overview of Apache Spark and teaches you how to leverage this powerful tool in your own environment. In this course, you’ll learn about different components of the Spark ecosystem and how they work together to provide a scalable, fault-tolerant distributed system for processing large datasets in parallel.

By the end of this course, you will have gained practical skills when it comes to analyzing data using PySpark and Spark SQL as well as creating streaming analytics applications using Apache Spark Streaming. You’ll also gain some valuable information on best practices for deploying these tools into production environments.

Learning Hadoop – LinkedIn Learning

Learning Hadoop from LinkedIn Learning (Free Trial) will give you everything you need to know about using this powerful technology in your organization. You’ll get started with Apache Spark and see how it works with HDFS as well as other frameworks.

This course will make it easy for you to get started with Hadoop by providing a comprehensive overview of the ecosystem, including MapReduce, Hive, Pig, and Spark. You’ll also gain hands-on experience running real-world jobs on a working cluster.

With this course, you can be up and running in just an hour. First, it shows you how to set up your development environment so that you have everything needed for the rest of the lessons. Then it dives into each tool separately, starting with MapReduce and moving on to Hive, Pig, and Spark (including Spark’s machine learning libraries).

By the end of this course, you’ll have all the skills necessary to build workflows that schedule jobs on a cluster or run machine learning algorithms over large datasets stored in HDFS clusters.

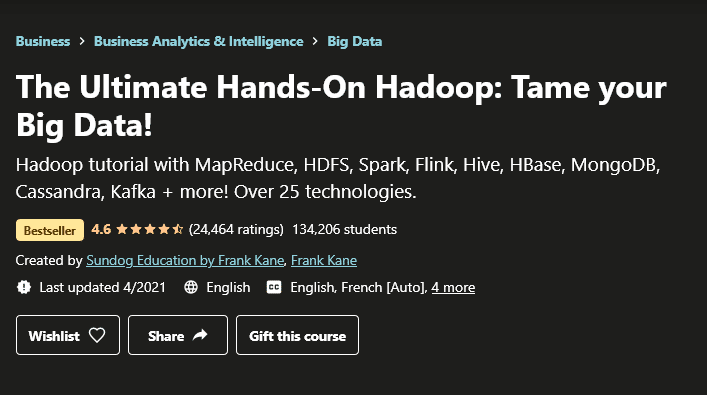

The Ultimate Hands-On Hadoop Course – Udemy

The Ultimate Hands-On Hadoop Course (View Course) is the best resource for learning Hadoop. It’s designed specifically for developers who want to get their hands dirty with coding in real-world projects using Big Data technology. You’ll leave this course knowing how to use all of the major components of Hadoop and you’ll have a strong understanding of how they work together as part of an integrated system.

This course has distilled over half a dozen different technologies into 14 hours of video lectures designed to teach you exactly what you need to know about Hadoop – no fluff or filler. And don’t worry if your background isn’t in computer science or software engineering; the instructor of this course has carefully crafted his curriculum so everyone can follow along.

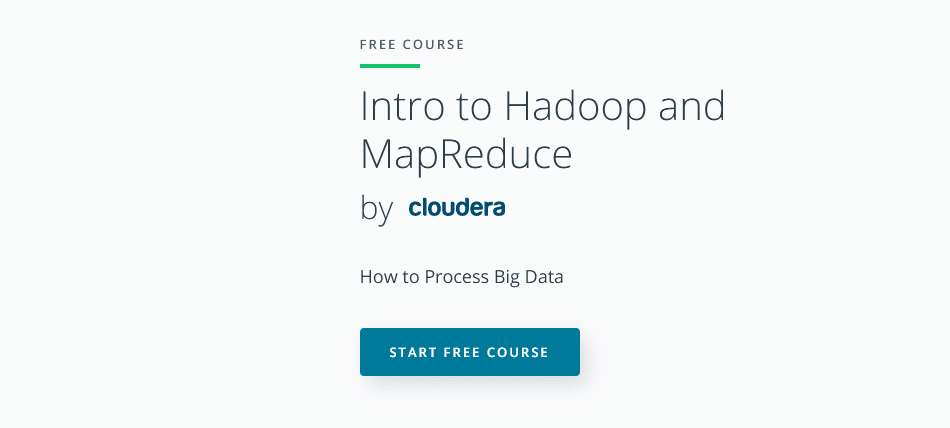

Intro to Hadoop and MapReduce – Udacity

Udacity’s Intro to Hadoop and MapReduce (Free Course) will help you master the fundamentals of Hadoop in approximately a month. You’ll learn how HDFS works as well as how MapReduce enables analyzing large datasets across multiple machines. By the end of this course, you’ll have all the tools necessary for writing your own Hadoop jobs using Python.
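To make the MapReduce model concrete, here is a minimal single-process sketch of a word count in Python. It is not taken from the course: the function names `map_words`, `shuffle`, `reduce_counts`, and `word_count` are hypothetical, and everything runs in memory on one machine. In a real Hadoop job the map and reduce steps would run on different nodes, and the framework itself would perform the shuffle between them.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_words(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by key.

    Hadoop does this automatically between the map and reduce phases;
    it is written out here only to show the data flow.
    """
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: collapse all values for one key into a single total."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence on an in-memory dataset."""
    pairs = (pair for line in lines for pair in map_words(line))
    grouped = shuffle(pairs)
    return dict(reduce_counts(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["big data big clusters", "data moves to compute"]
    print(word_count(sample))
```

Because each call to `map_words` looks at one line only and each call to `reduce_counts` looks at one key only, both steps can be spread across many machines, which is the property that lets MapReduce scale to large datasets.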

Hadoop and MapReduce are two of the most important technologies in Big Data today, but they can be a little intimidating at first. They’re core technologies that you’ll need to master if you want to work with big data, which means there’s a lot of information out there about them – some good, some bad.

That’s why Udacity created this course – so that even someone with no experience in either technology could understand enough about these tools by the end of it to get started using them right away.

We think Udacity offers the best online courses for learning big data because they teach students exactly how Hadoop works through real-world examples, rather than through theory alone as in more academic classes on Hadoop.

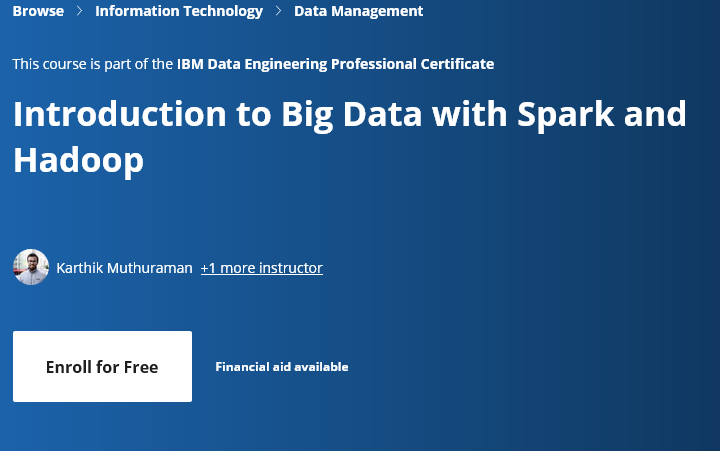

Introduction to Big Data with Spark and Hadoop – IBM

IBM’s Introduction to Big Data with Spark and Hadoop (View Course) will help you understand what makes Hadoop different from other frameworks and how it can be used for a variety of applications, including business intelligence (BI), real-time analysis, streaming analytics, and machine learning.

In this course, you’ll learn about Apache Spark as an alternative processing engine that allows for faster data processing than Hadoop MapReduce. You’ll also explore how Hive helps leverage the benefits of Big Data while overcoming some of its challenges.

By the end of this course, you will have gained an understanding of how to use various components of Hadoop such as HDFS (Hadoop Distributed File System) with Spark in order to process large datasets using simple programming models like SQL or Python scripts.

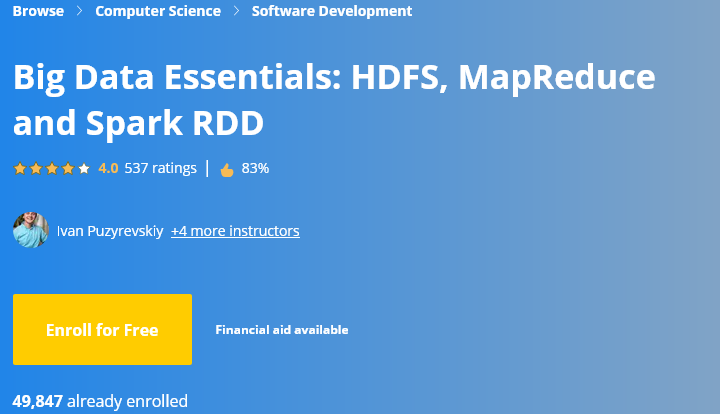

Big Data Essentials: HDFS, MapReduce and Spark RDD – Yandex

Big Data Essentials: HDFS, MapReduce, and Spark RDD by Yandex (View Course) focuses on those topics that are often left out in other courses or books about Big Data, namely distributed file systems and MapReduce frameworks.

This course also explains why we need both HDFS and Spark RDD for modern data processing tasks, thus allowing you to build a strong understanding of the whole ecosystem surrounding these concepts.
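As a rough illustration of the distributed file system idea, the sketch below shows how a file might be cut into fixed-size blocks with several replicas of each block spread over storage nodes. It is a simplified model, not course material: `plan_blocks` and the node names are hypothetical, and placement is plain round-robin, whereas real HDFS uses a rack-aware placement policy. The 128 MB block size and replication factor of 3 match the HDFS defaults in Hadoop 2.x and later.

```python
import math
from typing import Dict, List

BLOCK_SIZE_MB = 128  # default HDFS block size in Hadoop 2.x and later
REPLICATION = 3      # default HDFS replication factor


def plan_blocks(
    file_size_mb: int,
    nodes: List[str],
    block_size_mb: int = BLOCK_SIZE_MB,
    replication: int = REPLICATION,
) -> Dict[int, List[str]]:
    """Return a mapping of block index -> nodes that hold a replica.

    Placement is simple round-robin (an assumption made for brevity);
    it only guarantees that replicas of one block land on distinct nodes.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    layout: Dict[int, List[str]] = {}
    for block in range(num_blocks):
        # Start at a different node for each block, then take the next ones.
        layout[block] = [
            nodes[(block + offset) % len(nodes)] for offset in range(replication)
        ]
    return layout


if __name__ == "__main__":
    for block, holders in plan_blocks(300, ["n1", "n2", "n3", "n4"]).items():
        print(f"block {block}: {holders}")
```

Since every block lives on several nodes, losing one machine does not lose data, and a processing engine such as Spark can schedule work on whichever node already holds a copy of the block it needs.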

There are a lot of courses online that cover the basics of Big Data. But they either focus too much on theory or don’t provide enough details about how things work under the hood.

This course is different in that it combines both aspects, providing you with an extensive theoretical background and hands-on experience in solving real-world problems using Hadoop and Spark technologies.

The goal of this course is to give you practical skills for working with these technologies by showing how they can be used to solve real business tasks.

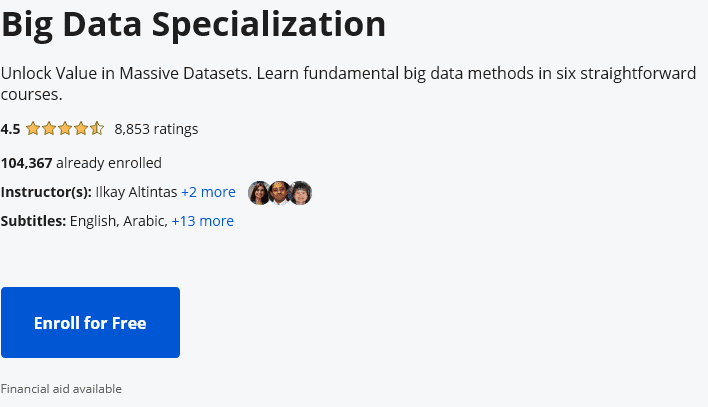

Big Data Specialization – UC San Diego

This Big Data specialization from UC San Diego (View Course) covers everything you need to know about big data analytics, including how to use tools like MapReduce, Spark, Pig, and Hive. By following along with the provided code, you’ll experience how one can perform predictive modeling and leverage graph analytics to model real-world data sets using distributed systems like Hadoop.

This specialization will prepare you to ask the right questions about your own company’s or organization’s data so that you can apply your newfound skills to make better decisions in your business.

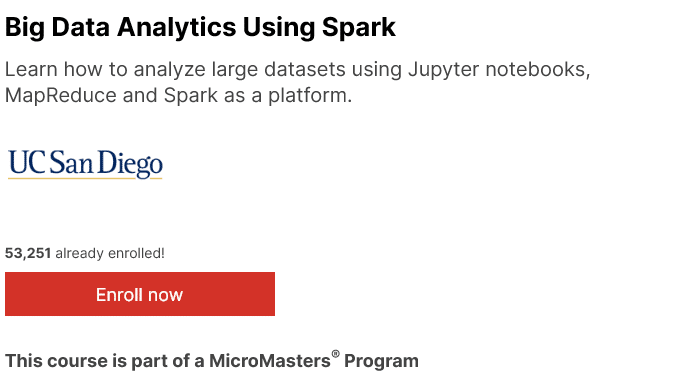

Big Data Analytics Using Spark – UC San Diego

UC San Diego’s Big Data Analytics Using Spark (View Course) will teach you all about Spark and its two most important features, MLlib and PySpark. These are tools for performing machine learning on massive datasets using Hadoop clusters with thousands of nodes.

This course will teach you how to perform supervised and unsupervised machine learning on massive datasets using the Machine Learning Library (MLlib). You will also learn how to interact with Spark from Python notebooks within Jupyter, a popular open-source tool for scientific computing.

Finally, this course will introduce some basic concepts behind distributed systems like MapReduce and Apache YARN, so that by the end of the class you have a solid foundation for working with large datasets using Spark as your computational engine.

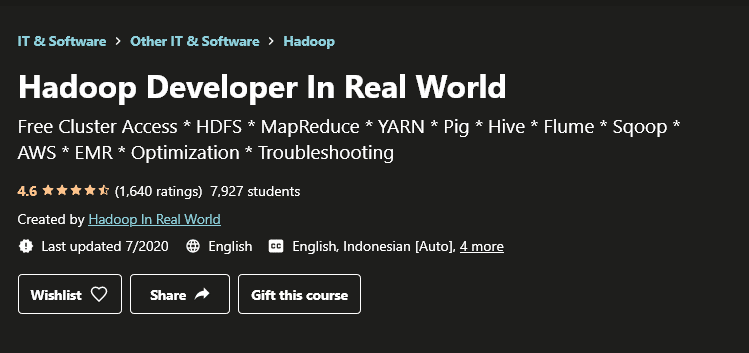

Hadoop Developer In Real World – Udemy

The Become a Hadoop Developer in the Real World Course (View Course) has been designed specifically for those who have little or no prior knowledge of big data technologies like Apache Hadoop and NoSQL databases such as MongoDB.

This course starts from scratch by covering all the basics required to become a successful big data developer including HDFS, MapReduce, YARN, etc. It then moves on to more advanced topics like custom Writables, input/output formats, and troubleshooting techniques.

Finally, this course covers some advanced concepts such as file formats (Avro), optimization techniques (Hive), and much more.

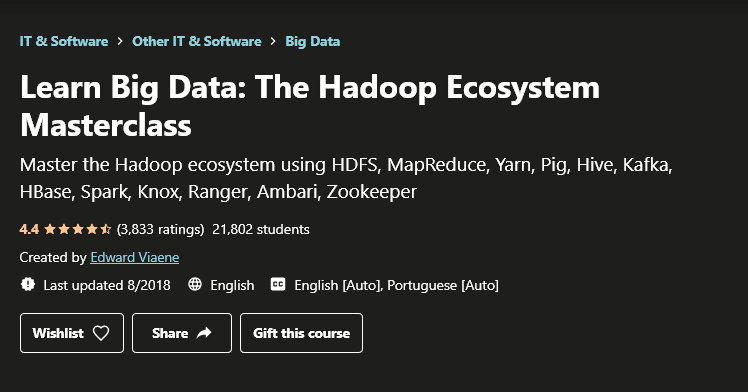

Learn Big Data: The Hadoop Ecosystem Masterclass – Udemy

The Hadoop Ecosystem Masterclass (View Course) will teach you everything you need to know about the Hortonworks Data Platform (HDP) stack and related technologies, including HDFS, MapReduce, YARN, Hive, Pig, HBase, Spark SQL, and Kafka.

Most of the time, when people think about big data, they think of Hadoop. But there’s a lot more than just the Hadoop core in a big data technology stack. Alongside HDFS (Hadoop Distributed File System) there are Hive, Pig, Spark, and other tools that run on top of Hadoop, as well as security services like Ranger and Knox, the Ambari management and monitoring tool, and the ZooKeeper coordination service.

The best way to learn is to see how these individual components work together to solve real-world problems with the Hortonworks Data Platform (HDP). A good way to start is an online course that covers each component individually before combining them into projects that use the entire stack, so you become familiar with each part on its own before applying them together.

Deploying a Hadoop Cluster – Udacity

Udacity’s Deploying a Hadoop Cluster (Free Course) will teach you how to deploy your own Hadoop cluster on Amazon EC2 instances in under 3 weeks, with zero prior experience. The course includes hands-on exercises so you can practice what you learn, along with quizzes to assess your understanding of each lesson.

This course uses Amazon Web Services (AWS) to provide hands-on learning for students interested in working with big data using AWS services such as Amazon Elastic Compute Cloud (EC2), Simple Storage Service (S3), DynamoDB, and more.

In this course, you will learn how to deploy a small Hadoop cluster on EC2 instances, use Apache Ambari to automatically deploy a larger, more powerful Hadoop cluster on EC2 within an existing VPC environment, and use Amazon’s Elastic MapReduce service to deploy a large-scale Hadoop cluster on demand that is inexpensive enough for daily analytics tasks.

Big Data Analytics with Hadoop and Apache Spark – LinkedIn Learning

Big Data Analytics with Hadoop and Apache Spark (Free Trial) will teach you how to leverage Apache Hadoop and Spark in order to build scalable analytics pipelines that work with your existing infrastructure.

In this course you’ll learn about the best ways of modeling your data on HDFS; ingesting large volumes of data into HDFS using Spark; processing this information in Spark; and optimizing all this information so you can get meaningful insights from your data. You’ll also practice what you’ve learned by building a real-world project throughout the course.

Advance Your Skills in the Hadoop/NoSQL Data Science Stack – LinkedIn Learning

Advance Your Skills in the Hadoop/NoSQL Data Science Series (Free Trial) will help you get up to speed with Big Data by teaching you how to use these tools from scratch, so even if this is your first experience with them, these classes will take it slow and make sure everything makes sense.

The series comprises 7 courses with over 13 hours of video instruction in total, taught by experts who know Hadoop and data science inside out, so you can start doing real data science work right away.